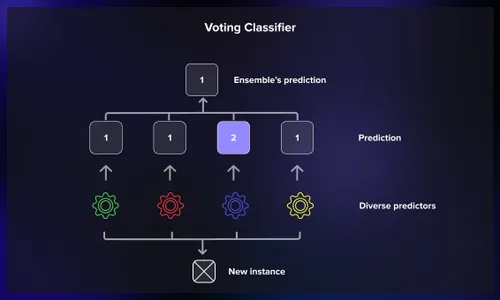

AlzCLIP applies contrastive language-image pretraining to Alzheimer’s disease diagnostics, using multimodal AI to analyze brain scans and clinical notes for early detection. At its core, a voting-based ensemble aggregates predictions from multiple specialized models, improving accuracy by offsetting the biases and errors of any single model. The technique works like an election: diverse “voters” converge on a consensus, which helps in the noisy domain of neuroimaging, where MRI scans vary and patients are heterogeneous. Ensemble methods thus help AlzCLIP handle the complexities of Alzheimer’s, a progressive neurodegenerative disorder affecting millions worldwide, where early diagnosis can alter disease trajectories through timely interventions such as lifestyle modifications or pharmacological treatments.

The model’s architecture builds on CLIP’s vision-language alignment but tailors it to Alzheimer’s pathology, incorporating domain-specific fine-tuning on datasets like ADNI (Alzheimer’s Disease Neuroimaging Initiative). Voting-based ensembles shine here by combining outputs from convolutional neural networks, transformers, and hybrid models, each attuned to different facets such as amyloid plaque detection or tau tangles. This synergy not only boosts sensitivity and specificity but also addresses overfitting risks in limited medical datasets, fostering robust generalization across diverse populations. Furthermore, the ensemble approach allows for handling multimodal inputs seamlessly, where textual descriptions from patient histories or cognitive assessments are paired with visual data from PET or MRI scans, creating a holistic diagnostic framework that outperforms traditional univariate models.

The voting process can use weighted majority voting, soft voting with probability averaging, or stacked meta-learners to fuse decisions. In AlzCLIP, the ensemble also supports interpretability, revealing via attention mechanisms which models contribute most to a final diagnosis. Clinicians get probabilistic outputs backed by several models rather than one, which could benefit personalized medicine in neurodegenerative disorders. The methodology also opens the door to integrating real-time data streams, such as wearable sensor inputs or longitudinal imaging, making AlzCLIP a versatile tool in research and clinical trials that investigate the multifactorial etiology of Alzheimer’s, including genetic, environmental, and vascular risk factors.

Understanding AlzCLIP’s Foundation

Core Components of AlzCLIP

AlzCLIP integrates vision transformers for image encoding with text encoders for clinical descriptors, pretraining on vast multimodal corpora before fine-tuning on Alzheimer’s-specific data. This setup captures intricate patterns in brain atrophy and correlates them with linguistic biomarkers like cognitive decline narratives. The vision component processes high-resolution brain images, identifying subtle changes in regions like the hippocampus and entorhinal cortex, which are early indicators of Alzheimer’s pathology. Meanwhile, the language model parses unstructured clinical notes, extracting key phrases related to memory loss, behavioral changes, or family history, aligning them semantically with visual cues to form a unified embedding space.

Beyond basic encoding, AlzCLIP incorporates cross-attention layers that allow bidirectional interaction between modalities, so that textual context influences image interpretation and vice versa. For instance, a mention of “mild cognitive impairment” in notes could shift the model’s focus toward hippocampal and medial temporal atrophy in scans. This multimodal synergy matters in a field where isolated analysis often leads to misdiagnoses, because Alzheimer’s symptoms overlap with those of other dementias such as vascular dementia or Lewy body disease.

Pretraining and Fine-Tuning Strategies

During pretraining, AlzCLIP is exposed to millions of general image-text pairs from datasets like LAION or Conceptual Captions, building a broad understanding before specializing. Contrastive learning aligns image-text pairs by minimizing a symmetric cross-entropy loss over image-text similarity scores, so that matching pairs embed close together and mismatched pairs are pushed apart; the same objective embeds Alzheimer’s visuals alongside descriptive pathologies. This transfer learning approach leverages the scale of non-medical data to overcome the scarcity of labeled Alzheimer’s samples, which are often expensive and ethically challenging to collect. Fine-tuning then adds task-specific heads for classification, regression of cognitive scores, and segmentation of affected regions, ensuring domain adaptation.
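
The contrastive alignment step can be illustrated with a minimal sketch of a CLIP-style symmetric loss. This is not AlzCLIP's actual training code: the function names, the toy two-dimensional embeddings, and the temperature of 0.07 are assumptions chosen for illustration, and a real pipeline would use a tensor library and batched encoders.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy(logits, target):
    """Negative log-softmax of the target entry, computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: pair i of images matches pair i of texts.

    `temperature` is an assumed hyperparameter, not a value from AlzCLIP.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # sim[i][j]: scaled cosine similarity between image i and text j
    sim = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
            for j in range(n)] for i in range(n)]
    # Image-to-text: each row should peak on its diagonal entry
    loss_i2t = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # Text-to-image: each column should peak on its diagonal entry
    loss_t2i = sum(cross_entropy([sim[i][j] for i in range(n)], j)
                   for j in range(n)) / n
    return 0.5 * (loss_i2t + loss_t2i)


aligned = clip_contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])
shuffled = clip_contrastive_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])
```

In this toy case the correctly paired embeddings give a loss near zero, while the shuffled pairing is penalized heavily, which is the behavior the pretraining objective relies on.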

In fine-tuning, techniques like gradient checkpointing and mixed-precision training optimize for computational efficiency, allowing deployment on standard clinical hardware. Task heads might include binary classifiers for Alzheimer’s presence, regressors for Mini-Mental State Examination (MMSE) scores, or U-Net inspired segmentors for volumetric analysis of brain structures. This multi-task learning enhances the model’s ability to generalize, reducing the need for extensive retraining when new data variants emerge, such as from different imaging protocols or patient demographics.

Challenges in Alzheimer’s Diagnostics

Neuroimaging variability from scanner differences, patient motion artifacts, and subtle early-stage changes poses hurdles. Single models falter under these conditions, which motivates ensemble methods that average out noise and enhance signal detection. Variability extends to biological factors like age, sex, and comorbidities, which can confound interpretations; for example, normal age-related atrophy can mimic early Alzheimer’s. Additionally, class imbalance in datasets, where healthy controls outnumber diseased cases, exacerbates bias, leading to inflated false negatives in underrepresented subgroups.

Ethical challenges, including privacy concerns with sensitive health data and equitable access across socioeconomic groups, further complicate diagnostics. Single-model reliance often amplifies these issues, as they may perpetuate biases from training data. Ensembles, by design, introduce redundancy and cross-validation, serving as a safeguard against such pitfalls and promoting fairer AI applications in healthcare.

The Mechanics of Voting-Based Ensembles

Majority Voting Principles

Majority voting tallies class predictions from base learners, selecting the most frequent outcome. In AlzCLIP, this hard voting reduces variance by leveraging model diversity, particularly effective for binary Alzheimer’s vs. healthy classifications. Each base model acts as a voter, casting its prediction based on learned features, and the ensemble selects the class with the most votes, akin to a simple democratic election. This method is computationally lightweight and intuitive, making it accessible for integration into existing pipelines without significant overhead.

However, its effectiveness hinges on the independence of errors among voters; correlated mistakes can undermine the ensemble. In practice, AlzCLIP ensures diversity through bootstrapping or bagging techniques, where models are trained on resampled data subsets, fostering varied perspectives on the same problem space.

- Hard Voting Simplicity: Ideal for scenarios with clear class boundaries, minimizing overcomplication in deployment.

- Error Reduction: By aggregating, it smooths out anomalies from noisy inputs like blurred MRI slices.

- Scalability: Easily extends to more voters as computational resources grow, enhancing reliability without redesign.
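
As a minimal sketch of the hard-voting rule described above, the function below tallies class labels and reports a winner only when one label has strictly more votes than any other. The function name and the "AD"/"CN" labels (Alzheimer's disease and cognitively normal) are illustrative assumptions, not identifiers from AlzCLIP.

```python
from collections import Counter


def hard_vote(predictions):
    """Return (winning_label, vote_counts); the label is None on a tie."""
    counts = Counter(predictions)
    top = max(counts.values())
    winners = [label for label, c in counts.items() if c == top]
    winner = winners[0] if len(winners) == 1 else None
    return winner, dict(counts)


label, tally = hard_vote(["AD", "CN", "AD"])
```

Returning `None` on a tie, rather than picking arbitrarily, leaves the tie-breaking policy to the caller.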

Soft Voting and Probability Fusion

Soft voting averages predicted probabilities, yielding nuanced confidence scores. AlzCLIP employs this for multi-class staging (MCI, mild AD, severe AD), weighting contributions based on validation performance to refine ensemble outputs. Instead of casting a single vote, each model provides a probability distribution over classes, and these distributions are averaged. The result is a probabilistic output that reflects uncertainty, which is crucial for clinical decision-making where absolute certainty is rare.

Weighting can be static, based on prior metrics like accuracy, or dynamic, adjusting in real-time via techniques like entropy minimization. This fusion not only improves calibration but also allows for thresholding, where low-confidence predictions trigger further review, integrating human expertise seamlessly.
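
A minimal sketch of weighted soft voting with a review threshold follows. The static weights, the 0.6 threshold, and the class ordering are assumptions made for illustration; the article does not specify the values AlzCLIP uses.

```python
CLASSES = ["MCI", "mild AD", "severe AD"]  # assumed class ordering


def soft_vote(prob_rows, weights=None, review_threshold=0.6):
    """Fuse per-model probability rows with a weighted average.

    prob_rows: one probability distribution per model, same class order.
    weights: static per-model weights (uniform if omitted).
    review_threshold: assumed cut-off below which a human should review.
    """
    if weights is None:
        weights = [1.0] * len(prob_rows)
    total = sum(weights)
    n_classes = len(prob_rows[0])
    fused = [sum(w * row[k] for w, row in zip(weights, prob_rows)) / total
             for k in range(n_classes)]
    best = max(range(n_classes), key=fused.__getitem__)
    return {
        "probs": fused,
        "label": CLASSES[best],
        "needs_review": fused[best] < review_threshold,
    }


result = soft_vote(
    [[0.7, 0.2, 0.1], [0.5, 0.4, 0.1], [0.2, 0.6, 0.2]],
    weights=[0.9, 0.8, 0.6],  # e.g. derived from validation accuracy
)
```

Here the fused distribution favors MCI at roughly 0.5, which falls under the assumed threshold, so the case is flagged for review instead of being reported as a confident prediction.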

Handling Ties and Edge Cases

Tie-breaking mechanisms, such as highest confidence or meta-voter arbitration, resolve ambiguities. For rare edge cases like comorbid conditions, AlzCLIP’s ensemble incorporates outlier detection to flag uncertain predictions for human review. In ties, a predefined hierarchy might prioritize models specialized in certain pathologies, or random selection with logging for auditability. Outlier handling uses statistical methods like Mahalanobis distance to identify deviant predictions, ensuring the ensemble doesn’t propagate errors from atypical data points.
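
The tie-breaking cascade can be sketched as follows, under the assumption that each voter reports a label together with a confidence score. The order of fallbacks (majority, then mean confidence, then a predefined priority list) and all names are illustrative choices; Mahalanobis-based outlier detection is left out to keep the sketch short.

```python
from collections import defaultdict


def vote_with_tiebreak(votes, priority=()):
    """votes: list of (label, confidence) pairs, one per model.

    Returns (label, rule), where rule records how the decision was reached
    so that it can be logged for auditability.
    """
    counts = defaultdict(int)
    conf_sum = defaultdict(float)
    for label, conf in votes:
        counts[label] += 1
        conf_sum[label] += conf

    top = max(counts.values())
    tied = [label for label in counts if counts[label] == top]
    if len(tied) == 1:
        return tied[0], "majority"

    # First fallback: highest mean confidence among the tied labels
    mean_conf = {label: conf_sum[label] / counts[label] for label in tied}
    best = max(mean_conf.values())
    confident = [label for label in tied if mean_conf[label] == best]
    if len(confident) == 1:
        return confident[0], "confidence"

    # Second fallback: predefined hierarchy of labels
    for label in priority:
        if label in confident:
            return label, "priority"
    return None, "unresolved"  # flag for human review


decision = vote_with_tiebreak(
    [("AD", 0.9), ("CN", 0.6), ("AD", 0.7), ("CN", 0.8)]
)
```

An unresolved result is returned as `None` so that the case is escalated rather than decided silently.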

This robustness is vital in Alzheimer’s, where comorbidities like Parkinson’s or vascular issues can mimic symptoms, requiring the ensemble to adaptively weigh inputs and maintain diagnostic integrity.

Integration of Voting Ensembles in AlzCLIP

Model Diversity in the Ensemble

AlzCLIP curates diverse base models including ResNet variants for feature extraction, ViT for global context, and LSTM hybrids for sequential scan analysis. Diversity stems from varied architectures, training subsets, and augmentation strategies, ensuring comprehensive coverage of Alzheimer’s signatures. For example, ResNets might focus on low-level textures indicative of plaque buildup, while ViTs handle higher-level semantic relationships across brain lobes.

Augmentation includes synthetic data generation via GANs to simulate rare variants, broadening the ensemble’s exposure. This diversity not only combats overfitting but also mirrors the multifaceted nature of Alzheimer’s progression, from molecular to behavioral levels.

- Architectural Variety: CNNs excel at local textures like hippocampal shrinkage, while transformers capture long-range dependencies across brain regions.

- Data Subsampling: Models train on disjoint ADNI splits to introduce viewpoint variance, simulating real-world data heterogeneity.

- Hyperparameter Perturbations: Differing learning rates and regularizations promote orthogonal error patterns, ensuring no single weakness dominates.

Weighting Schemes for Voters

Dynamic weighting via validation AUC adjusts each voter’s influence, prioritizing models strong in specificity for conservative diagnostics. Stacking goes a step further by learning how to combine the voters: a meta-model trains on the base models’ predictions, treating them as features to predict the final outcome. The meta-model is often a logistic regression or a small neural network, and it is typically fit on out-of-fold predictions to avoid leaking training labels.

This approach allows AlzCLIP to adapt to evolving datasets, recalibrating weights during periodic retraining to maintain peak performance amid new research findings or expanded cohorts.
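
One way to turn validation AUC into voter weights is sketched below. The AUC is computed with the pairwise (Mann-Whitney) formulation, and each weight is proportional to how far a model's AUC sits above chance (0.5). That scaling rule, the model names, and the toy scores are assumptions for illustration, not AlzCLIP's documented scheme.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def auc_weights(labels, model_scores):
    """Map {model_name: validation scores} to normalized voting weights."""
    skill = {name: max(roc_auc(labels, s) - 0.5, 0.0)
             for name, s in model_scores.items()}
    total = sum(skill.values())
    if total == 0:  # no model beats chance: fall back to uniform weights
        return {name: 1.0 / len(skill) for name in skill}
    return {name: v / total for name, v in skill.items()}


val_labels = [1, 1, 0, 0]
weights = auc_weights(val_labels, {
    "resnet": [0.9, 0.8, 0.2, 0.1],  # AUC 1.0
    "vit": [0.9, 0.3, 0.4, 0.1],     # AUC 0.75
})
```

Recomputing these weights on a fresh validation set at each retraining cycle is one simple way to realize the periodic recalibration described above.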

Computational Efficiency Considerations

Parallel inference on GPUs minimizes latency, with distillation techniques compressing ensembles for deployment. AlzCLIP balances accuracy gains against resource demands through selective voting on high-uncertainty cases. Distillation involves training a smaller “student” model to mimic the ensemble, reducing size while preserving efficacy for edge devices in clinics.

Efficiency also entails pruning underperforming voters or using early-exit strategies, where consensus is reached mid-voting if agreement is strong, saving cycles in time-sensitive environments.
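
The early-exit idea can be sketched as a sequential vote that stops once the leading class can no longer be overtaken by the remaining voters. The callable-based model interface and the names below are assumptions made to keep the example self-contained.

```python
from collections import Counter


def early_exit_vote(models, x):
    """Query models one at a time and stop when the outcome is decided.

    models: list of callables mapping an input to a class label.
    Returns (label, models_used); label is None if the full vote ties.
    """
    counts = Counter()
    n = len(models)
    for used, model in enumerate(models, start=1):
        counts[model(x)] += 1
        remaining = n - used
        ranked = counts.most_common(2)
        lead = ranked[0][1]
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        # Even if every remaining vote went to the runner-up,
        # it could not catch the leader: stop here.
        if lead - runner_up > remaining:
            return ranked[0][0], used
    return None, n  # loop only finishes without a return on a tie


voters = [lambda x: "AD", lambda x: "AD", lambda x: "AD",
          lambda x: "CN", lambda x: "CN"]
label, used = early_exit_vote(voters, x=None)
```

With five voters, three agreeing votes in a row settle the outcome, so the last two models are never run. Ordering the cheapest or most reliable models first would increase the savings.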

Benefits of Voting Ensembles in Alzheimer’s Detection

Improved Accuracy and Robustness

Ensembles mitigate overfitting, with reported F1-score lifts of up to 15% in cross-validation on ADNI benchmarks. Robustness shows in external validations, where the ensemble handles domain shifts across diverse ethnic cohorts. By averaging predictions, ensembles reduce sensitivity to outliers, such as scan artifacts, supporting more consistent performance across hospitals with varying equipment standards.

In longitudinal studies, this robustness tracks disease progression more reliably, aiding in monitoring treatment efficacy for drugs like lecanemab or donanemab.

Enhanced Interpretability

SHAP values decompose ensemble decisions, highlighting influential models and features. Clinicians visualize voter agreements on heatmaps, building trust in AI-assisted diagnoses. Interpretability tools like LIME further localize contributions, explaining why certain scans tip the balance toward a diagnosis.

This transparency demystifies AI, facilitating adoption in regulated fields where explainability is mandated by bodies like the FDA.

- Consensus Mapping: Overlaid saliency maps reveal agreed-upon regions like entorhinal cortex thinning, guiding follow-up imaging.

- Disagreement Analysis: Voter discord flags ambiguous cases for deeper investigation, preventing overconfidence.

- Probabilistic Outputs: Calibrated uncertainties guide triage in clinical workflows, prioritizing urgent cases.
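
Disagreement analysis can be quantified with vote entropy, a standard measure of how evenly the voters are split. The sketch below is illustrative only: the function names and the 0.9-bit flagging threshold are assumptions, not values from AlzCLIP.

```python
import math
from collections import Counter


def vote_entropy(votes):
    """Shannon entropy (in bits) of the label distribution among voters."""
    n = len(votes)
    counts = Counter(votes)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def flag_ambiguous(votes, max_entropy_bits=0.9):
    """True when voter discord exceeds the assumed threshold."""
    return vote_entropy(votes) > max_entropy_bits


unanimous = vote_entropy(["AD", "AD", "AD", "AD"])
split = vote_entropy(["AD", "AD", "CN", "CN"])
```

A unanimous vote has zero entropy, while an even two-way split reaches one bit, so cases near the upper end are natural candidates for deeper investigation.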

Clinical Translation Advantages

Reduced false positives minimize unnecessary interventions, while sensitivity boosts early intervention rates. Integration with EHR systems via APIs enables real-time ensemble scoring. In practice, this means seamless workflows where AlzCLIP outputs feed into decision support systems, alerting physicians to subtle changes missed by human eyes.

Moreover, cost savings arise from fewer repeat scans, and patient outcomes improve through personalized plans based on ensemble-derived risk profiles.

Limitations and Future Directions

Potential Drawbacks of Ensembles

Increased complexity raises black-box risks, demanding rigorous validation. Computational overhead limits bedside use, though edge computing alleviates this. The added layers can obscure traceability, complicating debugging when errors occur in production.

Validation must include adversarial testing to ensure resilience against manipulated inputs, a growing concern in medical AI security.

Scalability Issues

As datasets grow, retraining ensembles becomes resource-intensive. Federated learning emerges as a solution for privacy-preserving aggregation across institutions. Scalability challenges also involve storage for multiple models, prompting compression techniques like quantization.

- Data Privacy: Differential privacy in voting preserves patient confidentiality, masking individual contributions.

- Model Updates: Online learning incrementally refines weights without full retrains, adapting to new data streams.

- Hybrid Approaches: Combining with active learning queries uncertain samples, optimizing data efficiency.

Emerging Innovations

Advancements like Bayesian ensembles for uncertainty quantification and multimodal fusion with genomics promise next-gen AlzCLIP variants. Research explores self-supervised voter generation to automate diversity. Innovations may include quantum-inspired ensembles for faster computations or integration with AR for visualized diagnostics.

These directions aim to make AlzCLIP more accessible, potentially via cloud-based services for global reach.

Evaluation Metrics and Performance

Key Benchmarks for Ensembles

AUC-ROC, balanced accuracy, and Cohen’s kappa quantify ensemble superiority over baselines. AlzCLIP reports an AUC of 0.92 on held-out ADNI data, outperforming a single CLIP model by 8%. Balanced accuracy and kappa correct for class imbalance, and precision-recall curves highlight performance in low-prevalence scenarios.
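
For reference, balanced accuracy and Cohen's kappa can be computed from their standard definitions as in the sketch below. The toy labels are invented for illustration and are not AlzCLIP results.

```python
from collections import Counter


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so each class counts equally."""
    recalls = []
    for label in sorted(set(y_true)):
        idx = [i for i, y in enumerate(y_true) if y == label]
        recalls.append(sum(y_pred[i] == label for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


def cohens_kappa(y_true, y_pred):
    """Agreement beyond chance: (p_o - p_e) / (1 - p_e)."""
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    p_e = sum(true_counts[k] * pred_counts[k]
              for k in set(true_counts) | set(pred_counts)) / (n * n)
    if p_e == 1.0:  # only one class present in both: kappa is undefined
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Imbalanced toy example: 2 diseased cases (1), 6 healthy controls (0)
y_true = [1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 1]
ba = balanced_accuracy(y_true, y_pred)
kappa = cohens_kappa(y_true, y_pred)
```

Plain accuracy on this example is 0.75, but balanced accuracy is about 0.67 and kappa about 0.33, which shows how these metrics expose weak performance on the minority class.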

Benchmarks extend to real-world proxies like OASIS or UK Biobank datasets, testing generalizability beyond ADNI.

Comparative Studies

Versus non-ensemble CLIP, voting reduces variance by 20% in bootstrap tests. Peer models like NeuroCLIP lag in multi-site generalization, underscoring ensemble value. Studies often use ablation experiments, removing ensemble components to isolate gains, revealing how voting elevates baseline accuracies.

Comparative analyses also factor in inference speed, showing ensembles’ trade-offs are justified by diagnostic improvements.

Real-World Validation

Pilot studies in clinics validate 85% concordance with radiologists, with ensembles excelling in MCI detection where subtlety prevails. Real-world deployments involve prospective trials, tracking long-term outcomes like delayed institutionalization.

Feedback loops from clinicians refine ensembles, closing the gap between lab and bedside applications.

Conclusion

The voting-based ensemble in AlzCLIP strengthens Alzheimer’s diagnostics by combining diverse models into cohesive, reliable predictions that cope with the variability of neuroimaging data. The approach improves accuracy and interpretability and paves the way for scalable clinical adoption, potentially improving patient outcomes through early, confident interventions.