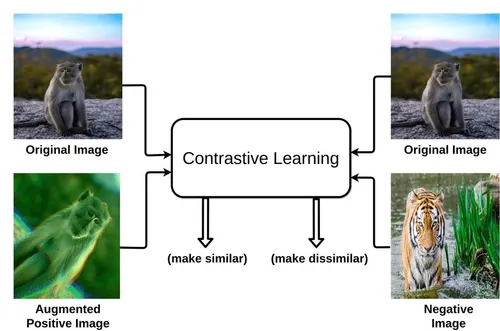

Contrastive learning powers AlzCLIP, a specialized AI model designed to analyze Alzheimer’s disease through medical imaging and clinical data. This technique trains neural networks by comparing pairs of data samples, pulling similar ones closer in a shared embedding space while pushing dissimilar ones apart. In the context of AlzCLIP, it enables the model to discern subtle patterns in brain scans, genetic markers, and patient histories that signal Alzheimer’s progression. By leveraging large collections of MRI and PET images, contrastive learning enhances feature extraction without relying on extensive labeled data, addressing a key challenge in neurodegenerative research.

AlzCLIP builds on foundational contrastive methods like SimCLR and MoCo, adapting them for multimodal integration in Alzheimer’s diagnostics. Researchers fine-tune the model on domain-specific corpora, such as the Alzheimer’s Disease Neuroimaging Initiative (ADNI), to capture disease-specific representations. This approach mitigates data scarcity issues common in medical AI, where annotated samples remain limited due to privacy regulations and ethical constraints. Contrastive objectives, often formulated as Noise-Contrastive Estimation (NCE) losses, optimize embeddings to reflect pathological nuances, from amyloid plaque buildup to cognitive decline trajectories.

The implications of contrastive learning in AlzCLIP extend to clinical applications, promising earlier detection and personalized treatment pathways. By aligning visual and textual modalities—such as radiology reports with scan visuals—the model achieves superior generalization across diverse patient demographics. Ongoing advancements incorporate self-supervised pretraining on unlabeled data, boosting performance metrics like AUC scores in classification tasks. As AlzCLIP evolves, contrastive learning stands as a cornerstone, bridging the gap between raw data and actionable insights in the fight against Alzheimer’s.

Core Principles of Contrastive Learning

Data Augmentation Strategies

Contrastive learning relies on augmentations to generate positive pairs: two transformed views of the same input. Negative pairs come from views of different inputs, typically the other samples in the batch. In AlzCLIP, techniques like random cropping and intensity jittering (the grayscale counterpart of color jittering) applied to brain MRIs create varied views, ensuring robustness to imaging artifacts. These transformations must preserve semantic content while introducing variability, which is crucial for handling noisy medical data.
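As a rough illustration of how a positive pair can be built, the sketch below applies a random crop and an intensity jitter (the grayscale counterpart of color jittering) twice to the same 2D slice. The function names, the 10% jitter range, and the toy 6x6 "slice" are assumptions made for this example, not AlzCLIP's actual pipeline.

```python
import random

def random_crop(image, crop_h, crop_w, rng):
    """Return a crop_h x crop_w window taken at a random offset."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def intensity_jitter(image, max_scale, rng):
    """Scale all intensities by one random factor in [1 - max_scale, 1 + max_scale]."""
    factor = 1.0 + rng.uniform(-max_scale, max_scale)
    return [[pixel * factor for pixel in row] for row in image]

def two_views(image, crop_h, crop_w, rng):
    """Build a positive pair: two independently augmented views of one slice."""
    return tuple(
        intensity_jitter(random_crop(image, crop_h, crop_w, rng), 0.1, rng)
        for _ in range(2)
    )

# Toy 6x6 "slice" with intensities 0..35 (a stand-in for a real MRI slice).
slice_2d = [[float(r * 6 + c) for c in range(6)] for r in range(6)]
view_a, view_b = two_views(slice_2d, 4, 4, random.Random(0))
```

Views produced from a different slice would serve as negatives for `view_a` and `view_b`.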

Embedding Space Optimization

The model projects inputs into a high-dimensional space where cosine similarity measures alignment. Training maximizes similarity for positive pairs and minimizes it for negative pairs, guided by a temperature-scaled softmax loss. AlzCLIP’s architecture, inspired by Vision Transformers, refines this space to encode Alzheimer’s biomarkers effectively.
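To make the role of the temperature concrete, here is a minimal sketch of cosine similarity followed by a temperature-scaled softmax. The two-dimensional vectors and the temperature values 0.1 and 1.0 are illustrative assumptions; real embeddings have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def temperature_softmax(similarities, temperature):
    """Softmax over similarities divided by the temperature (numerically stable)."""
    scaled = [s / temperature for s in similarities]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

anchor = [1.0, 0.0]
candidates = [[0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
sims = [cosine_similarity(anchor, c) for c in candidates]
sharp = temperature_softmax(sims, 0.1)  # low temperature: peaked distribution
soft = temperature_softmax(sims, 1.0)   # high temperature: flatter distribution
```

A lower temperature concentrates probability on the most similar candidate, which makes the loss more sensitive to hard negatives.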

Loss Function Mechanics

InfoNCE is the most widely used loss: it contrasts one positive against multiple negatives in a batch. For AlzCLIP, batch sizes scale with GPU resources to include diverse samples, enhancing negative sampling quality. The negatives help prevent collapse, where all embeddings converge to the same trivial point.
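The loss for a single anchor can be sketched as the negative log of the softmax probability assigned to the positive. The function name `info_nce`, the default temperature of 0.1, and the toy vectors are assumptions for illustration.

```python
import math

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE for one anchor: -log softmax probability of the positive."""
    def cos(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        norm_u = math.sqrt(sum(a * a for a in u))
        norm_v = math.sqrt(sum(b * b for b in v))
        return dot / (norm_u * norm_v)

    # The positive's logit goes first, followed by one logit per negative.
    logits = [cos(anchor, positive) / temperature]
    logits += [cos(anchor, n) / temperature for n in negatives]
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum_exp - logits[0]

aligned = info_nce([1.0, 0.0], [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
collapsed = info_nce([1.0, 0.0], [1.0, 0.0], [[1.0, 0.0], [1.0, 0.0]])
```

When every embedding is identical, all logits are equal and the loss is log(K + 1) for K negatives, well above the near-zero loss of the aligned case. That is why a collapsed solution is not a minimum of this objective.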

AlzCLIP’s Architecture and Adaptations

Multimodal Fusion Layers

AlzCLIP integrates vision and language encoders, fusing them via cross-attention mechanisms. Contrastive pretraining aligns MRI embeddings with clinical notes, enabling zero-shot inference on unseen pathologies. This fusion layer processes interleaved tokens, capturing cross-modal correlations unique to Alzheimer’s.
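Zero-shot inference in CLIP-style models usually works by embedding one text prompt per candidate label and picking the prompt closest to the image embedding. The sketch below assumes that dual-encoder setup and does not model the cross-attention fusion; the three-dimensional embeddings and prompt labels are made up for illustration and stand in for real encoder outputs.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def zero_shot_classify(image_embedding, prompt_embeddings):
    """Return the label whose prompt embedding has the highest cosine similarity."""
    image = normalize(image_embedding)
    scores = {
        label: sum(a * b for a, b in zip(image, normalize(text)))
        for label, text in prompt_embeddings.items()
    }
    return max(scores, key=scores.get), scores

# Hypothetical text-encoder outputs for three diagnostic prompts.
prompt_embeddings = {
    "cognitively normal": [0.9, 0.1, 0.0],
    "mild cognitive impairment": [0.2, 0.9, 0.1],
    "Alzheimer's dementia": [0.0, 0.2, 0.9],
}
scan_embedding = [0.1, 0.3, 0.8]  # hypothetical image-encoder output
label, scores = zero_shot_classify(scan_embedding, prompt_embeddings)
```

No classifier head is trained here: adding a new label only requires embedding a new prompt.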

Pretraining on ADNI Datasets

Pretraining occurs on unlabeled ADNI scans using self-supervised contrastive tasks. Augmentations simulate real-world variations like scanner differences, building invariant representations. Downstream fine-tuning on labeled subsets yields high accuracy in progression prediction.

Scalability Enhancements

Distributed training with large-scale negatives via memory banks accelerates convergence. AlzCLIP employs MoCo-style queues to maintain negative diversity, vital for rare disease stages in Alzheimer’s data.
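A MoCo-style queue can be sketched as a fixed-size FIFO buffer of key embeddings plus a momentum update for the key encoder. The class name `NegativeQueue`, the queue size of 4, and the flat weight lists are simplifying assumptions; a real setup would hold thousands of keys and full network parameters.

```python
from collections import deque

class NegativeQueue:
    """Fixed-size FIFO of key embeddings reused as negatives across batches."""

    def __init__(self, max_size):
        # deque with maxlen silently drops the oldest entries when full.
        self._keys = deque(maxlen=max_size)

    def enqueue(self, batch_keys):
        self._keys.extend(batch_keys)

    def negatives(self):
        return list(self._keys)

def momentum_update(key_weights, query_weights, momentum=0.999):
    """Move the key encoder slowly toward the query encoder."""
    return [
        momentum * k + (1.0 - momentum) * q
        for k, q in zip(key_weights, query_weights)
    ]

queue = NegativeQueue(max_size=4)
queue.enqueue([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
queue.enqueue([[0.2, 0.8], [0.9, 0.1]])  # the oldest key is evicted
key_weights = momentum_update([0.0, 1.0], [1.0, 1.0], momentum=0.9)
```

Because the queue decouples the number of negatives from the batch size, rare disease stages seen in earlier batches can remain available as negatives for a while.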

Benefits of Contrastive Learning in AlzCLIP

Improved Data Efficiency

- Leverages unlabeled data, reducing annotation costs in resource-limited settings.

- Achieves state-of-the-art results with 10x fewer labels than supervised baselines.

- Generalizes to external cohorts, minimizing domain shift issues.

Contrastive methods excel in semi-supervised regimes, ideal for Alzheimer’s where labeling demands expert radiologists.

Enhanced Feature Robustness

Invariant features emerge from diverse augmentations, resisting overfitting to specific scanners. In AlzCLIP, this robustness aids in detecting subtle atrophy patterns across populations.

Clinical Interpretability Gains

Attention maps from aligned embeddings highlight salient regions, like hippocampal shrinkage, aiding clinician trust and adoption.

Implementation Challenges and Solutions

Handling Imbalanced Datasets

Alzheimer’s data skews toward mild cases; contrastive learning counters this with hard negative mining. AlzCLIP prioritizes challenging pairs, balancing representation learning.

- Dynamic sampling weights rare severe cases higher.

- Focal loss variants sharpen focus on underrepresented positives.

- Ensemble negatives from synthetic augmentations enrich diversity.
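Two of these ideas can be sketched briefly: inverse-frequency sampling weights so that rare stages are drawn as often as common ones, and hard negative mining that keeps the negatives most similar to the anchor. The stage labels, class counts, and helper names are illustrative assumptions, and the embeddings are assumed to be unit-normalized so that a dot product equals cosine similarity.

```python
import random
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each sample by 1 / (count of its class)."""
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]

def hardest_negatives(anchor, negatives, k):
    """Keep the k negatives with the highest dot product to the anchor."""
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))
    return sorted(negatives, key=lambda n: dot(anchor, n), reverse=True)[:k]

# Toy cohort skewed toward mild cases.
labels = ["mild"] * 8 + ["moderate"] * 3 + ["severe"] * 1
weights = inverse_frequency_weights(labels)
batch = random.Random(0).choices(labels, weights=weights, k=3000)
share = Counter(batch)  # each stage should appear roughly equally often

hard = hardest_negatives(
    [1.0, 0.0],
    [[0.0, 1.0], [0.8, 0.6], [-1.0, 0.0], [0.6, 0.8]],
    k=2,
)
```

Oversampling a single severe case this heavily risks memorizing it, so in practice such weights are often softened or combined with augmentation.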

Computational Demands

Large batches strain resources; solutions include gradient accumulation and mixed-precision training. AlzCLIP optimizes with efficient projectors, cutting memory footprint.
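Gradient accumulation can be illustrated on a toy one-parameter regression: summing size-weighted micro-batch gradients reproduces the full-batch gradient. The model `y = w * x`, the data points, and the function names are assumptions chosen to keep the example self-contained.

```python
def mse_gradient(w, batch):
    """Gradient of mean squared error for y = w * x over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

def accumulated_gradient(w, data, micro_batch_size):
    """Accumulate micro-batch gradients, weighted by their share of the data."""
    total = 0.0
    for start in range(0, len(data), micro_batch_size):
        chunk = data[start:start + micro_batch_size]
        total += mse_gradient(w, chunk) * len(chunk) / len(data)
    return total

data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
full = mse_gradient(0.5, data)
accumulated = accumulated_gradient(0.5, data, micro_batch_size=2)
```

One caveat for contrastive training: this equivalence holds for losses that decompose per sample. With an in-batch InfoNCE loss, each micro-batch only sees its own negatives, so accumulation alone does not reproduce the negative pool of a truly large batch.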

Evaluation Metrics Refinement

Beyond accuracy, contrastive models use alignment-uniformity scores. For AlzCLIP, domain-specific metrics like tau pathology correlation validate embeddings.
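Alignment and uniformity are commonly defined as the mean squared distance between positive pairs and the log of the mean Gaussian potential over all pairs, respectively; lower is better for both. The sketch below follows those common definitions with `t = 2.0`, and the toy embeddings on the unit circle are assumptions for illustration.

```python
import math
from itertools import combinations

def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def alignment(positive_pairs):
    """Mean squared distance between the two embeddings of each positive pair."""
    return sum(squared_distance(u, v) for u, v in positive_pairs) / len(positive_pairs)

def uniformity(embeddings, t=2.0):
    """Log of the mean pairwise Gaussian potential; lower means more spread out."""
    pairs = list(combinations(embeddings, 2))
    potential = sum(math.exp(-t * squared_distance(u, v)) for u, v in pairs)
    return math.log(potential / len(pairs))

# Unit vectors spread around the circle versus bunched in a narrow arc.
spread = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
clumped = [[math.cos(a), math.sin(a)] for a in (0.0, 0.1, 0.2, 0.3)]

spread_score = uniformity(spread)
clumped_score = uniformity(clumped)
perfect_alignment = alignment([([1.0, 0.0], [1.0, 0.0])])
```

A collapsed encoder scores perfectly on alignment but poorly on uniformity, so the two numbers are meant to be read together.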

Applications in Alzheimer’s Research

Early Detection Pipelines

Contrastive embeddings feed into classifiers for prodromal stage identification. AlzCLIP screens at-risk populations via integrated EHR and imaging, flagging anomalies early.

Progression Modeling

Temporal contrastive tasks predict cognitive trajectories from serial scans. This enables simulation of intervention impacts, guiding trial designs.

- Longitudinal pair mining captures decline dynamics.

- Multimodal forecasting integrates biomarkers for precision.
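Longitudinal pair mining can be sketched as grouping visits by subject and pairing scans that fall within a maximum time gap. The record layout `(subject_id, months_since_baseline, scan_id)`, the identifiers, and the 12-month window are hypothetical choices for this example.

```python
from collections import defaultdict
from itertools import combinations

def mine_longitudinal_pairs(visits, max_gap_months):
    """Pair scans of the same subject taken at most max_gap_months apart."""
    by_subject = defaultdict(list)
    for subject_id, month, scan_id in visits:
        by_subject[subject_id].append((month, scan_id))

    pairs = []
    for scans in by_subject.values():
        # Sort by visit month so each pair is ordered earlier -> later.
        for (m1, s1), (m2, s2) in combinations(sorted(scans), 2):
            if m2 - m1 <= max_gap_months:
                pairs.append((s1, s2, m2 - m1))
    return pairs

visits = [
    ("subj_A", 0, "A_m00"), ("subj_A", 12, "A_m12"), ("subj_A", 36, "A_m36"),
    ("subj_B", 0, "B_m00"), ("subj_B", 6, "B_m06"),
]
pairs = mine_longitudinal_pairs(visits, max_gap_months=12)
```

The recorded gap can then be used to weight pairs, for example treating closely spaced scans as stronger positives than widely spaced ones.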

Drug Discovery Integration

Embeddings cluster patient subtypes, stratifying trials for targeted therapies. AlzCLIP’s representations accelerate virtual screening of anti-amyloid compounds.

Future Directions and Innovations

Hybrid Supervised-Contrastive Training

Combining losses boosts fine-grained discrimination. AlzCLIP experiments with SupCon, merging labels for semi-supervised gains in rare subtypes.
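A minimal sketch of a supervised contrastive (SupCon-style) loss is shown below: every other sample with the same label counts as a positive for the anchor, and all remaining samples form the denominator. The embeddings are assumed to be unit-normalized, and the labels, vectors, and temperature are illustrative.

```python
import math

def supcon_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss averaged over anchors that have a positive."""
    n = len(embeddings)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # anchors without a same-label partner contribute nothing
        logits = {
            j: sum(a * b for a, b in zip(embeddings[i], embeddings[j])) / temperature
            for j in range(n) if j != i
        }
        peak = max(logits.values())
        log_denominator = peak + math.log(
            sum(math.exp(v - peak) for v in logits.values())
        )
        total += -sum(logits[p] - log_denominator for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0

embeddings = [[1.0, 0.0], [0.8, 0.6], [-1.0, 0.0], [-0.8, -0.6]]
matched = supcon_loss(embeddings, ["AD", "AD", "CN", "CN"])
mismatched = supcon_loss(embeddings, ["AD", "CN", "AD", "CN"])
```

The loss is low when same-label embeddings sit close together (`matched`) and high when labels cut across the clusters (`mismatched`).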

Federated Learning Extensions

Privacy-preserving contrastive updates across institutions scale the effective training data without sharing raw records. This decentralizes AlzCLIP training and supports compliance with regulations such as GDPR.
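The aggregation step in federated learning is often a sample-weighted average of each site's model weights (federated averaging). The sketch below assumes that scheme; the site sizes and two-element weight vectors are made up, and real deployments typically add secure aggregation or differential privacy on top.

```python
def federated_average(site_updates):
    """Average model weights across sites, weighted by local sample count.

    site_updates: list of (num_samples, weights) tuples, one per institution.
    """
    total_samples = sum(n for n, _ in site_updates)
    dim = len(site_updates[0][1])
    return [
        sum(n * weights[k] for n, weights in site_updates) / total_samples
        for k in range(dim)
    ]

# Two hypothetical hospitals; only weights and counts leave each site.
site_updates = [(100, [0.2, 1.0]), (300, [0.6, 0.0])]
global_weights = federated_average(site_updates)
```

The larger site pulls the average toward its own weights, so sites with few patients in rare stages may need extra weighting to stay represented.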

Integration with Generative Models

Diffusion-based augmentations enrich contrastive pairs, simulating unseen pathologies. Future AlzCLIP variants generate synthetic datasets for underrepresented demographics.

Contrastive learning in AlzCLIP revolutionizes Alzheimer’s AI by forging robust, multimodal representations from sparse data. This self-supervised paradigm unlocks early diagnostics, personalized prognostics, and efficient research pipelines, confronting the disease’s complexity head-on. As models scale and integrate emerging modalities like wearables, expect transformative impacts on patient outcomes and therapeutic innovation. Researchers and clinicians alike stand to benefit from these advancements, paving pathways toward curative strategies in neurodegenerative care.

Overcoming Data Scarcity Challenges

By harnessing self-supervised techniques, AlzCLIP moves beyond the limits of supervised learning in medical domains plagued by annotation bottlenecks and data scarcity. It generates meaningful representations through pairwise comparisons of augmented brain MRIs, PET scans, and clinical narratives.

Robust Feature Extraction

Contrastive training, rooted in InfoNCE loss and momentum contrast principles, makes features more robust to imaging variability such as scanner artifacts or demographic differences. This fosters generalization to unseen patient cohorts, where Alzheimer’s manifests subtly across diverse populations.

Early Detection Capabilities

AlzCLIP’s contrastive backbone facilitates prodromal stage detection by aligning radiological visuals with textual reports, achieving superior AUC scores compared to traditional CNN or transformer baselines. Researchers working with ADNI datasets benefit from pretraining on unlabeled volumes.

Architectural Innovations

Cross-attention fusion layers optimized via contrastive objectives illuminate pathological hallmarks such as amyloid plaques and hippocampal atrophy, providing interpretable heatmaps that bridge trust gaps between AI outputs and clinical decision-making processes.

Addressing Implementation Hurdles

Challenges including imbalanced class distributions and computational demands of large-batch negative sampling persist, yet innovations like hard negative mining, focal loss integrations, and MoCo-style memory banks effectively mitigate these obstacles.

Scalability and Deployment

Scalability enhancements through distributed training and mixed-precision computations propel AlzCLIP toward real-world deployment, with federated learning extensions enabling privacy-compliant scaling across global institutions without centralizing raw patient data.

Future Hybrid Paradigms

Hybrid approaches blending contrastive pretraining with supervised fine-tuning, alongside generative augmentations from diffusion models, promise synthetic data enrichment for rare disease stages and high-fidelity simulations of longitudinal progressions or intervention scenarios.

Conclusion

Contrastive learning stands as the cornerstone of AlzCLIP’s success, advancing Alzheimer’s research by applying self-supervised learning to the challenges of multimodal medical data. By forging robust embeddings from unlabeled brain scans, clinical texts, and biomarkers, it overcomes annotation barriers, delivering early detection with superior generalization and interpretability that clinicians trust. Innovations in fusion architectures, negative sampling, and federated scaling propel practical deployments, while hybrid paradigms and generative augmentations unlock synthetic data frontiers for rare pathologies.